Deep Learning and Image Processing

Overview

Have you ever seen old monochrome pictures (most often grayscale) which have several artefacts, that are then colorised and made to look as if they were taken only recently with a modern camera? That is an example of image restoration, which can be more generally defined as the process of retrieving the underlying high quality original image given a corrupted image.

A number of factors can affect the quality of an image, with the most common being suboptimal photographing conditions (e.g. due to motion blur, poor lighting conditions), lens properties (e.g. noise, blur, lens flare), and post-processing artefacts (e.g. lossy compression schemes, which are methods that perform compression in such a way that it cannot be reversed and thus leads to a loss of information).

Another factor that can affect image quality is resolution. More specifically, low-resolution (LR) images contain a low number of pixels representing an object of interest, making it hard to make out the details. This can be either because the image itself is small, or because an object is far away from the camera thereby causing it to occupy a small area within the image.

Basic Concepts of Image Quality

Super-Resolution (SR) is a branch of Artificial Intelligence (AI) that aims to tackle this problem, whereby a given LR image can be upscaled to retrieve an image with higher resolution and thus more discernible details that can then be used in downstream tasks such as object classification, face recognition, and so on. Sources of LR images include cameras that may output low-quality images, such as mobile phones and surveillance cameras.

Before we dive into Super-Resolution, however, let’s look at some basic image concepts:

01

What is an Image?

An image is a function of two real variables, the coordinates x and y. The value of this function, f(x, y), represents the brightness (or color) at the point with coordinates (x, y). Usually, x and y refer to the horizontal and vertical axes, respectively.

02

What is a digital image?

When x, y, and the values of f are all finite, discrete quantities, we call the function f a digital image. In other words, a digital image is a representation of a two-dimensional image as a finite set of digital values, which are called pixels. The digital image contains a fixed number of rows and columns of pixels.
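As a minimal sketch of this idea, the snippet below stores a tiny grayscale image as a list of rows. The pixel values and the helper names `shape` and `pixel_at` are made up for illustration; real libraries usually store images in arrays rather than nested lists.

```python
# A tiny 3x4 grayscale digital image: a finite grid of pixel values.
# The values are arbitrary and chosen only for illustration.
image = [
    [0, 64, 128, 255],
    [32, 96, 160, 224],
    [16, 80, 144, 208],
]


def shape(img):
    """Return (rows, columns) of a rectangular image stored as a list of rows."""
    return len(img), len(img[0])


def pixel_at(img, x, y):
    """Return f(x, y), where x is the column index and y is the row index."""
    return img[y][x]


print(shape(image))           # (3, 4)
print(pixel_at(image, 2, 1))  # 160
```

Note that the row index comes first when indexing the nested list, which is why `pixel_at` swaps the order of x and y.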

03

What is a Pixel?

The digital image contains a fixed number of rows and columns, and each combination of these coordinates holds a value that represents the color and intensity of the image at that location. These values are known as picture elements, image elements, or pixels. You might also see the abbreviations px or pel (for picture element).

04

What is Color?

Let’s say we are using the RGB color model and want to represent our color image with the model’s components. In the RGB model, the red, green, and blue components are stored as separate color planes. However, when we represent the color of a single pixel, we combine these three values into a tuple with three elements.
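The relationship between color planes and per-pixel tuples can be sketched as follows. The 2x2 image and the function names `split_planes` and `merge_planes` are illustrative assumptions, not part of any particular library.

```python
# A 2x2 RGB image where each pixel is a (red, green, blue) tuple.
rgb_image = [
    [(255, 0, 0), (0, 255, 0)],
    [(0, 0, 255), (255, 255, 0)],
]


def split_planes(img):
    """Separate an RGB image into three single-channel planes."""
    red = [[pixel[0] for pixel in row] for row in img]
    green = [[pixel[1] for pixel in row] for row in img]
    blue = [[pixel[2] for pixel in row] for row in img]
    return red, green, blue


def merge_planes(red, green, blue):
    """Combine three planes back into per-pixel (r, g, b) tuples."""
    return [
        list(zip(r_row, g_row, b_row))
        for r_row, g_row, b_row in zip(red, green, blue)
    ]


red, green, blue = split_planes(rgb_image)
print(red)  # [[255, 0], [0, 255]]
print(merge_planes(red, green, blue) == rgb_image)  # True
```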

05

What are Binary Images?

Binary images are the simplest type of image because each pixel can take only two values: black or white, represented by 0 and 1, respectively. This type of image is referred to as a 1-bit image because one binary digit is enough to represent each pixel.
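One common way to obtain a binary image is to threshold a grayscale one. The sketch below assumes a fixed threshold of 128, which is an arbitrary choice for illustration; the function name `to_binary` is also hypothetical.

```python
def to_binary(gray, threshold=128):
    """Map each grayscale value to 1 (white) if it reaches the threshold, else 0 (black)."""
    return [[1 if value >= threshold else 0 for value in row] for row in gray]


gray = [
    [0, 100, 200],
    [130, 127, 255],
]
print(to_binary(gray))  # [[0, 0, 1], [1, 0, 1]]
```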

06

What are GrayScale Images?

As the name implies, the image contains gray-level information and no color information. The typical grayscale image contains 8 bits/pixel (bpp) of data. In other words, this type of image has 256 different shades of gray, with values ranging from 0 to 255, where 0 is black and 255 is white.

07

What are Color Images?

All grayscale and binary images are monochrome because they are made of varying shades of only one color. However, not all monochrome images are grayscale because monochromatic images can be made of any color. Color images are built from three such monochrome bands. Each 8-bit band represents red, green, or blue (RGB), which means that this type of image has 24 bits/pixel (8 bits for each color band).
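To make the 24 bits/pixel figure concrete, the sketch below packs three 8-bit bands into a single integer. Placing red in the most significant byte is an assumption made for illustration; actual file formats and libraries differ in channel order.

```python
def pack_rgb(r, g, b):
    """Pack three 8-bit values into one 24-bit integer (red in the top byte)."""
    return (r << 16) | (g << 8) | b


def unpack_rgb(value):
    """Recover the three 8-bit bands from a 24-bit integer."""
    return (value >> 16) & 0xFF, (value >> 8) & 0xFF, value & 0xFF


white = pack_rgb(255, 255, 255)
print(white)                 # 16777215, the largest 24-bit value
print(white == 2**24 - 1)    # True
print(unpack_rgb(pack_rgb(255, 128, 0)))  # (255, 128, 0)
```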

08

What are Indexed Images?

Indexed images are widely used in the GIF image format. Instead of drawing on the full color range (in 8-bit RGB, 256 x 256 x 256 = 16,777,216 colors), an indexed image uses a limited palette of colors. Every pixel in a GIF image stores an index into that palette rather than a full color value.
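A small sketch of the palette lookup follows. The three-color palette and the name `indexed_to_rgb` are invented for this example; a GIF color table can hold up to 256 entries.

```python
# A hypothetical palette: index 0 is black, 1 is white, 2 is red.
palette = [(0, 0, 0), (255, 255, 255), (255, 0, 0)]

# Each pixel stores only a small palette index instead of a full RGB tuple.
indexed_image = [
    [0, 1, 2],
    [2, 1, 0],
]


def indexed_to_rgb(indexed, colors):
    """Expand an indexed image into full RGB tuples by palette lookup."""
    return [[colors[index] for index in row] for row in indexed]


print(indexed_to_rgb(indexed_image, palette)[0])
# [(0, 0, 0), (255, 255, 255), (255, 0, 0)]
```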

What are the different stages of Image Processing?

This section covers the main stages of image processing so that you understand which operations the field offers and what output each operation produces.

01

Image acquisition:

As the name implies, this first key step of digital image processing aims to acquire images in a digital form. It includes preprocessing such as color conversion and scaling.

02

Image enhancement:

The main purpose of this stage is to bring out more detail in an image or in objects of interest by changing the brightness, contrast, and so on.
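A simple form of enhancement is a linear adjustment of each pixel, sketched below for an 8-bit grayscale image. The parameter names `contrast` and `brightness` and the clamping to the 0 to 255 range are assumptions of this sketch, not a standard API.

```python
def clamp(value):
    """Round to the nearest integer and keep the result within 0..255."""
    return max(0, min(255, int(round(value))))


def adjust(gray, contrast=1.0, brightness=0):
    """Apply new_value = contrast * old_value + brightness to every pixel."""
    return [[clamp(contrast * value + brightness) for value in row] for row in gray]


print(adjust([[100, 200]], contrast=1.5, brightness=10))  # [[160, 255]]
```

The second pixel would be 310 without clamping, so it saturates at 255. This loss of detail in bright areas is one reason enhancement parameters need to be chosen with care.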

03

Image restoration:

The purpose of image restoration is to repair defects that degrade an image. An image can be degraded for many reasons, such as camera misfocus, motion blur, or noise. Restoration lets you reconstruct these distorted parts to obtain more useful data.
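As one illustrative restoration technique (not the only one, and not one named in this article), a 3x3 median filter can remove isolated noisy pixels. The sketch below handles borders by repeating the nearest edge pixel, which is an assumption of this example.

```python
from statistics import median


def median_filter(gray):
    """Replace each pixel by the median of its 3x3 neighbourhood."""
    rows, cols = len(gray), len(gray[0])
    result = []
    for y in range(rows):
        out_row = []
        for x in range(cols):
            # Clamp indices so that border pixels reuse the nearest edge value.
            window = [
                gray[min(max(y + dy, 0), rows - 1)][min(max(x + dx, 0), cols - 1)]
                for dy in (-1, 0, 1)
                for dx in (-1, 0, 1)
            ]
            out_row.append(median(window))
        result.append(out_row)
    return result


# A flat image with a single corrupted ("salt") pixel in the centre.
noisy = [
    [10, 10, 10],
    [10, 255, 10],
    [10, 10, 10],
]
print(median_filter(noisy))  # [[10, 10, 10], [10, 10, 10], [10, 10, 10]]
```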

04

Color image processing:

This processing is focused on how humans perceive color, that is, how we can arrange the colors of images as wanted. We can do color balancing, color correction, and auto-white balance with color processing.

05

Wavelets and multi-resolution processing:

This is the foundation for representing images at various degrees of resolution.

06

Morphological processing:

Morphological processing processes images based on shapes to gain more information about the useful parts of the image. Tasks can be summarized as dilation, erosion, and so on.
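Dilation and erosion can be sketched on a binary image with a 3x3 square structuring element. Treating pixels outside the image as 0 is an assumption of this sketch, and the helper names are hypothetical.

```python
def neighbourhood(binary, y, x):
    """Collect the 3x3 neighbourhood of (y, x), using 0 outside the image."""
    rows, cols = len(binary), len(binary[0])
    return [
        binary[y + dy][x + dx] if 0 <= y + dy < rows and 0 <= x + dx < cols else 0
        for dy in (-1, 0, 1)
        for dx in (-1, 0, 1)
    ]


def dilate(binary):
    """A pixel becomes 1 if any pixel in its neighbourhood is 1."""
    return [
        [max(neighbourhood(binary, y, x)) for x in range(len(binary[0]))]
        for y in range(len(binary))
    ]


def erode(binary):
    """A pixel stays 1 only if every pixel in its neighbourhood is 1."""
    return [
        [min(neighbourhood(binary, y, x)) for x in range(len(binary[0]))]
        for y in range(len(binary))
    ]


# A single foreground pixel in the middle of a 5x5 image.
dot = [[1 if (y, x) == (2, 2) else 0 for x in range(5)] for y in range(5)]
grown = dilate(dot)
print(grown[1])             # [0, 1, 1, 1, 0]
print(erode(grown) == dot)  # True
```

Dilation grows the single pixel into a 3x3 block, and eroding that block shrinks it back to the original pixel.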

07

Segmentation:

This is one of the most commonly used techniques in image processing. The aim is to partition an image into multiple regions, often based on the characteristics of the pixels in the image, where each region generally corresponds to an object.
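One very simple way to partition an image, shown here only as an illustrative sketch, is to label connected groups of foreground pixels in a binary mask. The sketch assumes 4-connectivity (up, down, left, right); modern segmentation methods are considerably more sophisticated.

```python
def label_regions(binary):
    """Assign a distinct positive label to each 4-connected region of 1s."""
    rows, cols = len(binary), len(binary[0])
    labels = [[0] * cols for _ in range(rows)]
    count = 0
    for y in range(rows):
        for x in range(cols):
            if binary[y][x] == 1 and labels[y][x] == 0:
                count += 1
                labels[y][x] = count
                stack = [(y, x)]
                # Flood fill: spread the current label to all connected pixels.
                while stack:
                    cy, cx = stack.pop()
                    for ny, nx in ((cy - 1, cx), (cy + 1, cx), (cy, cx - 1), (cy, cx + 1)):
                        inside = 0 <= ny < rows and 0 <= nx < cols
                        if inside and binary[ny][nx] == 1 and labels[ny][nx] == 0:
                            labels[ny][nx] = count
                            stack.append((ny, nx))
    return labels, count


mask = [
    [1, 1, 0, 0],
    [0, 0, 0, 1],
    [0, 0, 1, 1],
]
labels, count = label_regions(mask)
print(count)   # 2
print(labels)  # [[1, 1, 0, 0], [0, 0, 0, 2], [0, 0, 2, 2]]
```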

Image Super-Resolution Essentials

Super-Resolution refers to increasing the resolution of an image. Suppose an image is of resolution (i.e., the image dimensions) 64×64 pixels and is super-resolved to a resolution of 256×256 pixels. In that case, the process is known as 4x upsampling since the spatial dimensions (height and width of the image) are upscaled four times.
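The change in dimensions can be illustrated with nearest-neighbour upscaling, where each LR pixel is simply repeated. This is a naive interpolation baseline used here only to show the 64×64 to 256×256 arithmetic; it does not recover any new detail the way a learned SR model aims to. The function name `upscale_nearest` is hypothetical.

```python
def upscale_nearest(img, factor):
    """Repeat every pixel 'factor' times along both height and width."""
    rows, cols = len(img), len(img[0])
    return [
        [img[y // factor][x // factor] for x in range(cols * factor)]
        for y in range(rows * factor)
    ]


# A synthetic 64x64 grayscale image with arbitrary values.
lr_image = [[(x + y) % 256 for x in range(64)] for y in range(64)]
sr_image = upscale_nearest(lr_image, 4)

print(len(lr_image), len(lr_image[0]))  # 64 64
print(len(sr_image), len(sr_image[0]))  # 256 256
```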

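Now, a Low Resolution (LR) image can be modeled mathematically from a High Resolution (HR) image using a degradation function, delta, and a noise component, eta. A commonly used form of this model, assuming the noise is additive, is the following:

$$I_{LR} = \delta(I_{HR}) + \eta$$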

In supervised learning methods, HR images are purposefully degraded using the above equation. The degraded images serve as inputs to the deep model, and the original images serve as the HR ground truth. This forces the model to learn a mapping between a low-resolution image and its high-resolution counterpart, which can then be applied to super-resolve any new image at test time.
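The construction of a training pair can be sketched as follows. This example assumes the degradation is block averaging (a simple form of downsampling) followed by additive Gaussian noise; both choices, along with the function name `degrade` and its parameters, are assumptions made for illustration, and real pipelines often use blur kernels, bicubic downsampling, or compression artefacts instead.

```python
import random


def degrade(hr, factor=2, noise_sigma=2.0, seed=0):
    """Create an LR image from an HR one: average each block, then add noise."""
    rng = random.Random(seed)  # fixed seed so that the example is repeatable
    rows, cols = len(hr) // factor, len(hr[0]) // factor
    lr = []
    for y in range(rows):
        lr_row = []
        for x in range(cols):
            block = [
                hr[y * factor + dy][x * factor + dx]
                for dy in range(factor)
                for dx in range(factor)
            ]
            value = sum(block) / len(block) + rng.gauss(0, noise_sigma)
            lr_row.append(max(0, min(255, round(value))))
        lr.append(lr_row)
    return lr


# A synthetic 8x8 HR image; (lr_input, hr_target) forms one training pair.
hr_target = [[(16 * x + 8 * y) % 256 for x in range(8)] for y in range(8)]
lr_input = degrade(hr_target, factor=2)
print(len(lr_input), len(lr_input[0]))  # 4 4
```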

Single-Image vs. Multi-Image Super-Resolution

Two types of Image SR methods exist depending on the amount of input information available. In Single-Image SR, only one LR image is available, which needs to be mapped to its high-resolution counterpart. In Multi-Image SR, however, multiple LR images of the same scene or object are available, which are all used to map to a single HR image.

In Single-Image SR methods, since the amount of input information available is low, fake patterns may emerge in the reconstructed HR image that have no discernible link to the content of the original image. This can create ambiguity, which in turn can mislead the final decision-makers (scientists or doctors), especially in delicate application areas like bioimaging.

Evaluation Techniques for Super Resolution Methods

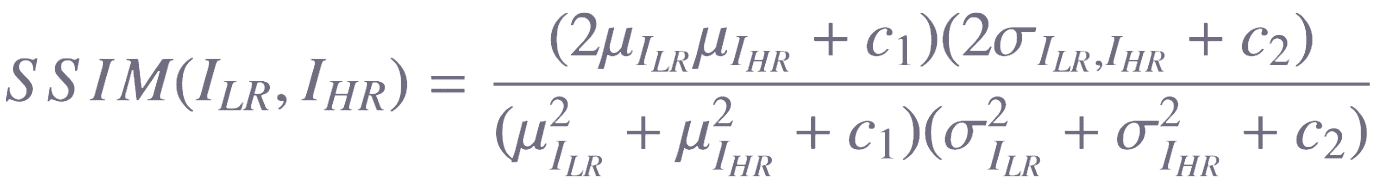

Peak Signal-to-Noise Ratio (PSNR) and Structural SIMilarity (SSIM) Index are the two most commonly used evaluation metrics for evaluating SR performance. PSNR and SSIM measures are generally both used together for a fair evaluation of methods compared to the state-of-the-art.

PSNR

Peak Signal-to-Noise Ratio or PSNR is an objective metric that measures the quality of image reconstruction of a lossy transformation. Mathematically, it is defined as follows:

$$\mathrm{PSNR} = 10 \cdot \log_{10}\left(\frac{M^2}{\mathrm{MSE}}\right)$$

Here, “MSE” represents the pixel-wise Mean Squared Error between the images, and “M” is the maximum possible value of a pixel in an image (for 8-bit RGB images, M = 255). The higher the value of PSNR (in decibels/dB), the better the reconstruction quality.
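A minimal sketch of this computation for grayscale images stored as nested lists follows, using the standard definition PSNR = 10 · log10(M² / MSE). The function names are illustrative, and returning infinity for identical images is a convention chosen for this sketch.

```python
import math


def mse(image_a, image_b):
    """Pixel-wise Mean Squared Error between two images of equal size."""
    total = sum(
        (a - b) ** 2
        for row_a, row_b in zip(image_a, image_b)
        for a, b in zip(row_a, row_b)
    )
    return total / (len(image_a) * len(image_a[0]))


def psnr(image_a, image_b, max_value=255):
    """PSNR in decibels; identical images give infinity because MSE is zero."""
    error = mse(image_a, image_b)
    if error == 0:
        return math.inf
    return 10 * math.log10(max_value ** 2 / error)


reference = [[50, 100], [150, 200]]
reconstructed = [[51, 99], [151, 199]]  # every pixel is off by exactly 1
print(mse(reference, reconstructed))             # 1.0
print(round(psnr(reference, reconstructed), 2))  # 48.13
```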

SSIM

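The Structural SIMilarity (SSIM) Index is a perception-motivated measure that determines the structural coherence between two images (here, the super-resolved image and the HR ground truth). Mathematically, for two image windows x and y, it can be defined as follows:

$$\mathrm{SSIM}(x, y) = \frac{(2\mu_x \mu_y + C_1)(2\sigma_{xy} + C_2)}{(\mu_x^2 + \mu_y^2 + C_1)(\sigma_x^2 + \sigma_y^2 + C_2)}$$

Here, $\mu_x$ and $\mu_y$ are the mean intensities, $\sigma_x^2$ and $\sigma_y^2$ are the variances, $\sigma_{xy}$ is the covariance of the two windows, and $C_1$ and $C_2$ are small constants that stabilise the division.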

SSIM is at most 1 and, for typical image pairs, falls between 0 and 1, where a higher value indicates greater structural coherence and thus better SR capability.
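The sketch below computes a simplified, global SSIM that treats the whole image as a single window. This is an assumption made to keep the example short: practical implementations usually compute SSIM over small local windows and average the results. The constants follow the commonly used choice C1 = (0.01·M)² and C2 = (0.03·M)², where M is the maximum pixel value.

```python
def ssim_global(image_a, image_b, max_value=255):
    """Simplified SSIM over the whole image, treated as a single window."""
    xs = [value for row in image_a for value in row]
    ys = [value for row in image_b for value in row]
    n = len(xs)
    mu_x = sum(xs) / n
    mu_y = sum(ys) / n
    var_x = sum((x - mu_x) ** 2 for x in xs) / n
    var_y = sum((y - mu_y) ** 2 for y in ys) / n
    cov_xy = sum((x - mu_x) * (y - mu_y) for x, y in zip(xs, ys)) / n
    c1 = (0.01 * max_value) ** 2  # stabilises the luminance term
    c2 = (0.03 * max_value) ** 2  # stabilises the contrast/structure term
    numerator = (2 * mu_x * mu_y + c1) * (2 * cov_xy + c2)
    denominator = (mu_x ** 2 + mu_y ** 2 + c1) * (var_x + var_y + c2)
    return numerator / denominator


original = [[10, 20], [30, 40]]
inverted = [[255 - value for value in row] for row in original]
print(ssim_global(original, original) > ssim_global(original, inverted))  # True
```

An image compared with itself scores 1 (up to floating-point rounding), while the inverted copy scores much lower because its structure is negatively correlated with the original.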

Conclusion

Image Super-Resolution, which aims to enhance the resolution of a degraded/noisy image, is an important Computer Vision task due to its enormous applications in medicine, astronomy, and security. Deep Learning has largely aided in the development of SR technology to its current glory. Multi-Image and Single-Image SR are the two distinct categories of SR methods. Multi-Image SR is computationally expensive but produces better results than Single-Image SR, where only one LR image is available for mapping to the HR image. In most applications, Single-Image SR resembles real-world scenarios more closely. However, in some applications, like satellite imaging, multiple frames for the same scene (often at different exposures) are readily available, and thus Multi-Image SR is used extensively.

Nilesh P

Nilesh is an avid Blockchain programmer and specializes in ML-based algorithms using Python. He is part of the back-end practice at AARCHIK Solutions.